EPM for Windows performance reports | EPM-WM

EPM-W 25.8 performance report

The aim of this document is to provide data on agreed performance metrics of the Endpoint Privilege Management for Windows (EPM-W) desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results, such as hardware configuration, Windows configuration and background activities, third-party products, and the nature of the EPM policy being used.

During the 25.8 release we upgraded our performance benchmark infrastructure to use Windows 11 24H2 and re-ran our benchmark for 25.4 MR2 on that platform in addition to testing on 25.8. Because the infrastructure has changed, the results in this report shouldn’t be compared with older benchmarks; this is purely an N-1 release comparison.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our datacenter. Each VM is configured with:

- Windows 11 24H2

- 4 Core 3.3GHz CPU

- 8GB RAM

Testing was performed using the GA release of 25.8.2.

Test Name

Quick Start policy with a single matching rule.

Test Method

This test involves a modified Quick Start policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 60 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The Quick Start policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.
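The once-per-second launch loop described in the test method can be sketched as follows. This is a minimal illustration rather than the actual test harness: the function name `run_periodically` and the stand-in command are hypothetical, and the sketch times only the wall-clock duration of each launch. The rule matching latency reported in this document comes from EPM-W activity logging, which this sketch does not read.

```python
import subprocess
import sys
import time


def run_periodically(command, runs, interval_s=1.0):
    """Launch `command` `runs` times, one launch per `interval_s` seconds.

    Returns the wall-clock duration of each launch in milliseconds.
    """
    durations_ms = []
    start = time.perf_counter()
    for i in range(runs):
        t0 = time.perf_counter()
        subprocess.run(command, check=True)
        durations_ms.append((time.perf_counter() - t0) * 1000.0)
        # Sleep until the next scheduled slot so that drift does not accumulate.
        next_slot = start + (i + 1) * interval_s
        time.sleep(max(0.0, next_slot - time.perf_counter()))
    return durations_ms


if __name__ == "__main__":
    # Hypothetical stand-in for the dummy executable: a Python process that exits at once.
    # A 60-minute run at one launch per second would use runs=3600.
    durations = run_periodically([sys.executable, "-c", "pass"], runs=3)
    print(f"{len(durations)} launches completed")
```

Scheduling each launch against the start time, rather than sleeping a fixed second after each run, keeps the launch rate steady even when an individual launch is slow.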

Results

Listed below are the results from the runs of the tests on 25.8.2 and our previous release, 25.4.287.0 (note that the 25.4 version number increased because of the testing infrastructure changes). Due to the nature of our product, we are sensitive to the OS and general computer activity, so some fluctuation is to be expected.

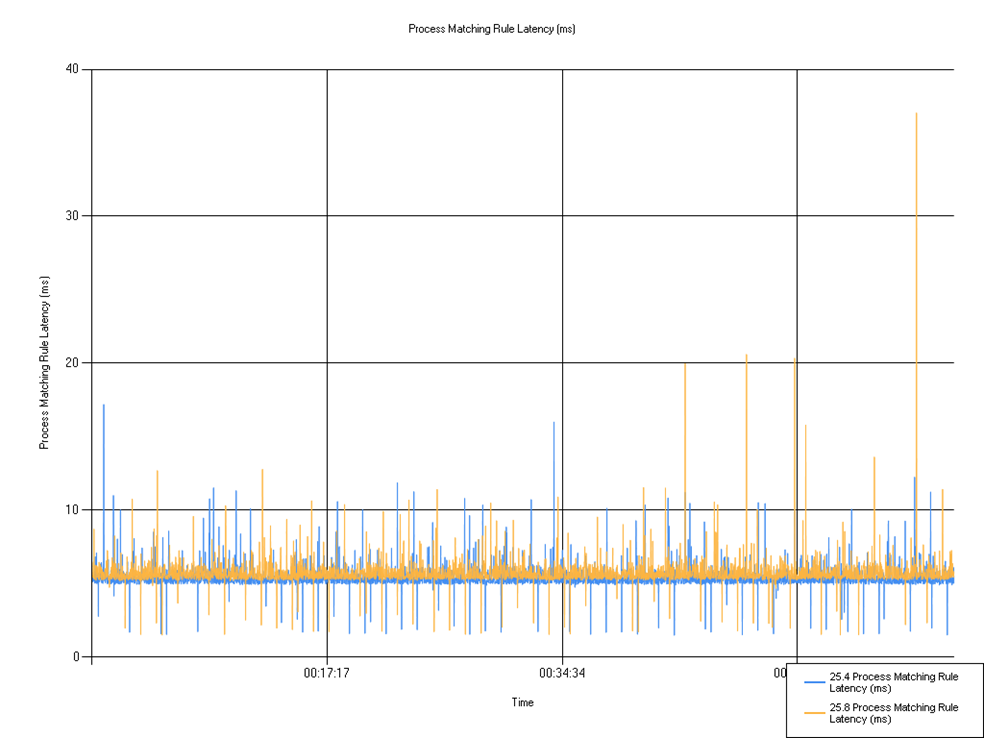

Rule matching latency

Shows the time taken for the rule to match.

Both versions show a very similar pattern. The means differ by 0.32 ms, although 25.8 recorded a higher maximum (37.03 ms vs. 17.19 ms).

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.4 Process Matching Rule Latency (ms) | 5.42 | 1.51 | 17.19 |
| 25.8 Process Matching Rule Latency (ms) | 5.74 | 1.52 | 37.03 |
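Each results table in this report gives the mean, minimum and maximum of one recorded series. As a minimal sketch of how such a row could be derived from raw samples, the snippet below uses invented sample values; the helper names `summarize` and `to_table_row` are hypothetical and are not part of the test tooling.

```python
from statistics import mean


def summarize(series_name, samples):
    """Return a results-table row (Series, Mean, Min, Max) for the samples."""
    if not samples:
        raise ValueError("at least one sample is required")
    return {
        "Series": series_name,
        "Mean": round(mean(samples), 2),
        "Min": round(min(samples), 2),
        "Max": round(max(samples), 2),
    }


def to_table_row(summary):
    """Format a summary as a pipe-delimited row like the tables in this report."""
    return "| {Series} | {Mean:.2f} | {Min:.2f} | {Max:.2f} |".format(**summary)


# Invented latency samples in milliseconds, for illustration only.
example = summarize("Example Latency (ms)", [1.5, 5.0, 8.5])
print(to_table_row(example))  # | Example Latency (ms) | 5.00 | 1.50 | 8.50 |
```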

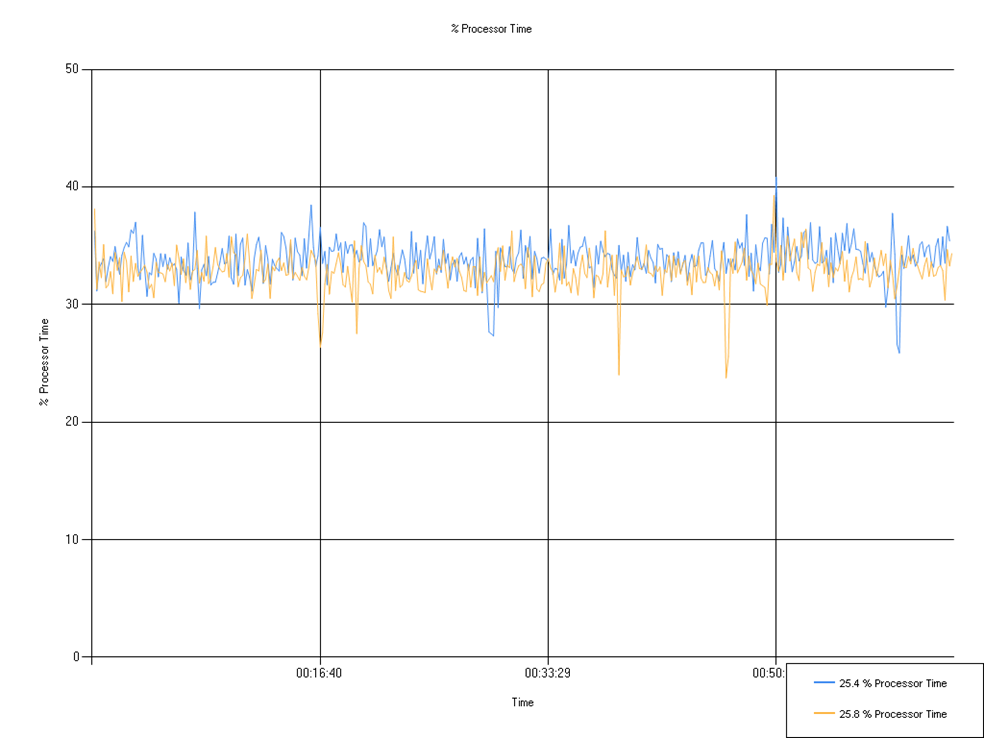

Processor time (Defendpoint)

Percentage of processor time used by the Defendpoint service.

A small, negligible decrease in % Processor Time was observed for 25.8 (mean of 32.82 vs. 33.87).

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.4 % Processor Time | 33.87 | 25.87 | 40.83 |
| 25.8 % Processor Time | 32.82 | 23.76 | 39.27 |
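To put the release-to-release differences in the tables above into numbers, the change in a mean can be expressed both as an absolute difference and as a percentage of the previous release's value. The sketch below is illustrative only; the function name `compare_means` is hypothetical, and the input values are the means copied from the 25.8 tables.

```python
def compare_means(previous, current):
    """Return (absolute change, relative change in percent) between two means."""
    if previous == 0:
        raise ValueError("previous mean must be non-zero for a relative change")
    absolute = current - previous
    relative_pct = absolute / previous * 100.0
    return absolute, relative_pct


# Mean values copied from the 25.8 results tables in this report: (25.4, 25.8).
means = {
    "Process Matching Rule Latency (ms)": (5.42, 5.74),
    "% Processor Time": (33.87, 32.82),
}

for name, (v25_4, v25_8) in means.items():
    absolute, relative_pct = compare_means(v25_4, v25_8)
    print(f"{name}: {absolute:+.2f} ({relative_pct:+.2f}%)")
```

For these inputs the mean latency changes by about +0.32 ms (roughly +5.9%) and the mean processor time by about -1.05 percentage points (roughly -3.1%).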

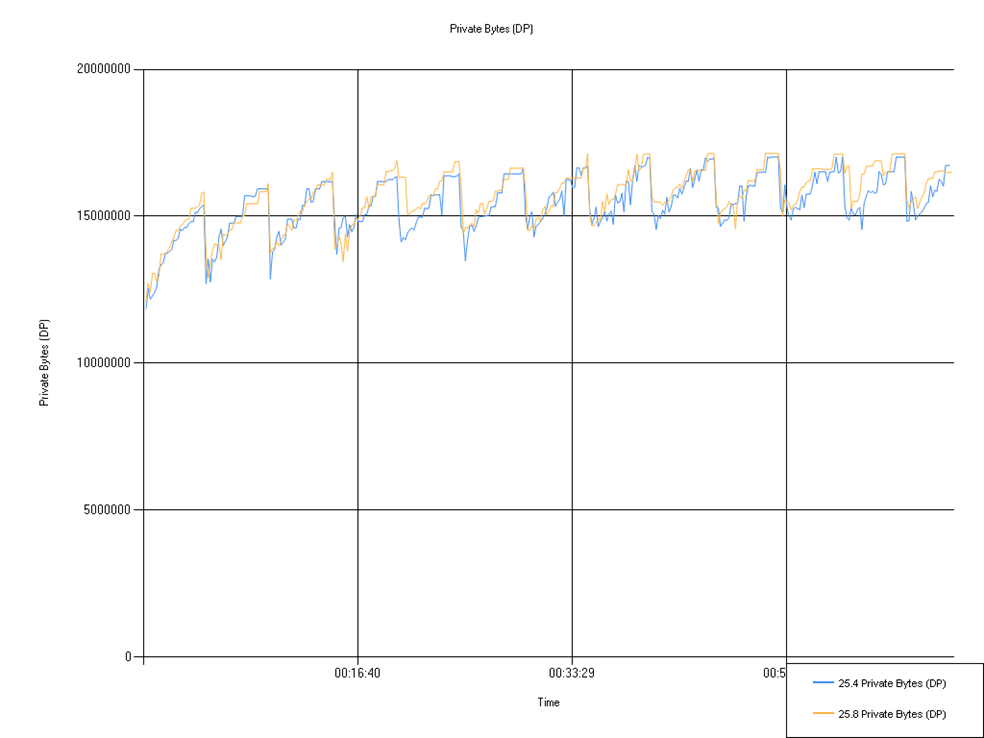

Private Bytes (Defendpoint)

Shows the total memory, in bytes, privately allocated by the Defendpoint process. The change between releases is negligible.

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.4 Private Bytes (DP) | 15,444,371.30 | 11,857,920.00 | 17,018,880.00 |
| 25.8 Private Bytes (DP) | 15,674,207.72 | 12,091,390.00 | 17,137,660.00 |
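The Private Bytes values above are raw byte counts, which can be easier to compare once converted to mebibytes. The following is a small sketch of that conversion; the helper names `parse_bytes` and `to_mib` are hypothetical, and the inputs are the mean values copied from the table.

```python
def parse_bytes(text):
    """Parse a counter value such as '15,444,371.30' into a float number of bytes."""
    return float(text.replace(",", ""))


def to_mib(num_bytes):
    """Convert bytes to mebibytes (1 MiB = 1024 * 1024 bytes)."""
    return num_bytes / (1024 * 1024)


# Mean Private Bytes values copied from the table above.
mean_25_4 = parse_bytes("15,444,371.30")
mean_25_8 = parse_bytes("15,674,207.72")

print(f"25.4 mean: {to_mib(mean_25_4):.2f} MiB")
print(f"25.8 mean: {to_mib(mean_25_8):.2f} MiB")
print(f"Difference: {(mean_25_8 - mean_25_4) / 1024:.1f} KiB")
```

The means work out to about 14.73 MiB for 25.4 and 14.95 MiB for 25.8, a difference of roughly 224 KiB.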

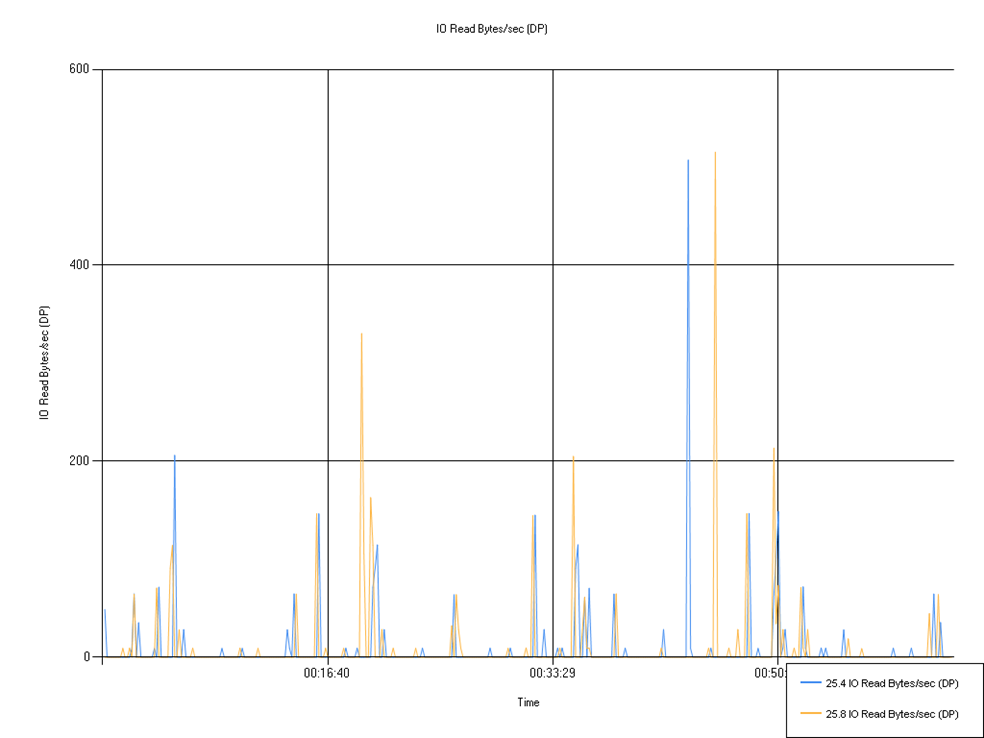

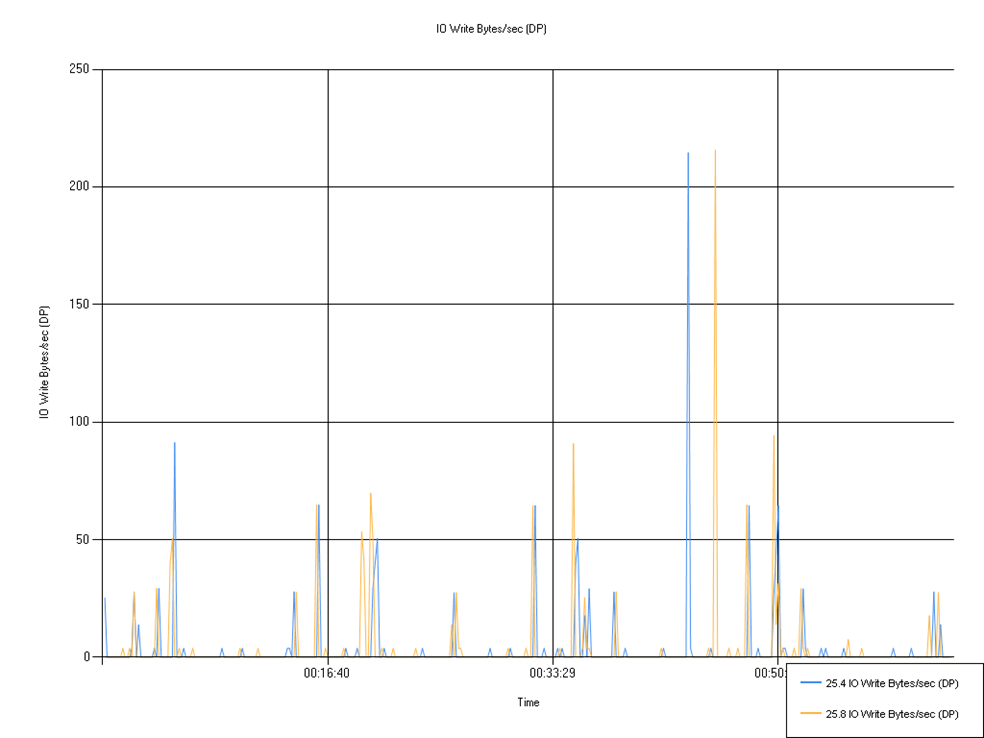

I/O read and write (Defendpoint)

Shows disk I/O used by the Defendpoint service.

No notable patterns are visible in the graphs, though the overall averages for 25.8 are slightly higher.

IO Read Bytes/sec (DP)

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.4 IO Read Bytes/sec (DP) | 8.16 | 0.00 | 507.40 |
| 25.8 IO Read Bytes/sec (DP) | 9.05 | 0.00 | 515.50 |

IO Write Bytes/sec (DP)

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.4 IO Write Bytes/sec (DP) | 3.33 | 0.00 | 214.55 |
| 25.8 IO Write Bytes/sec (DP) | 3.48 | 0.00 | 215.71 |

Memory testing

For each release we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only suites that test impacted areas are run; for example, EventAuditJson would be run if a release contained ECS auditing changes. If nothing concerning is found, the build proceeds to production.
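With one output file per test scenario, a first-pass triage can help the reviewing developer decide which files to open first. The sketch below is an assumption-laden illustration, not our actual tooling: it assumes the reports are plain-text files in a single directory, and that a clean report contains the summary line "No memory leaks detected", which is the wording VLD is assumed to print here. The function name `triage_reports`, the file pattern and the encoding are all hypothetical.

```python
from pathlib import Path

# Assumed wording of the VLD summary line for a clean run.
NO_LEAKS_MARKER = "No memory leaks detected"


def triage_reports(report_dir, pattern="*.txt", encoding="utf-8"):
    """Split report files into (clean, suspect) lists of file names.

    A file counts as clean only if it contains the no-leaks marker;
    anything else is flagged for manual review.
    """
    clean, suspect = [], []
    for path in sorted(Path(report_dir).glob(pattern)):
        text = path.read_text(encoding=encoding, errors="replace")
        if NO_LEAKS_MARKER in text:
            clean.append(path.name)
        else:
            suspect.append(path.name)
    return clean, suspect
```

Treating every file without the marker as suspect is deliberately conservative: an empty or truncated report is surfaced for review rather than silently passed.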

No notable issues found.

EPM-W 25.4 performance report

The aim of this document is to provide data on agreed performance metrics of the Privilege Management for Windows desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results, such as hardware configuration, Windows configuration and background activities, third-party products, and the nature of the EPM policy being used.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our datacenter. Each VM is configured with:

- Windows 10 22H2

- 4 Core 3.3GHz CPU

- 4GB RAM

Testing was performed using the GA release of 25.4.

Test Name

Low Flexibility Quick Start policy with a single matching rule.

Test Method

This test involves a modified Low Flexibility Quick Start policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 30 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The Quick Start policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.

Results

Listed below are the results from the runs of the tests on 25.4 and our previous release, 25.2 MR. Due to the nature of our product, we are very sensitive to the OS and general computer activity, so some fluctuation is to be expected.

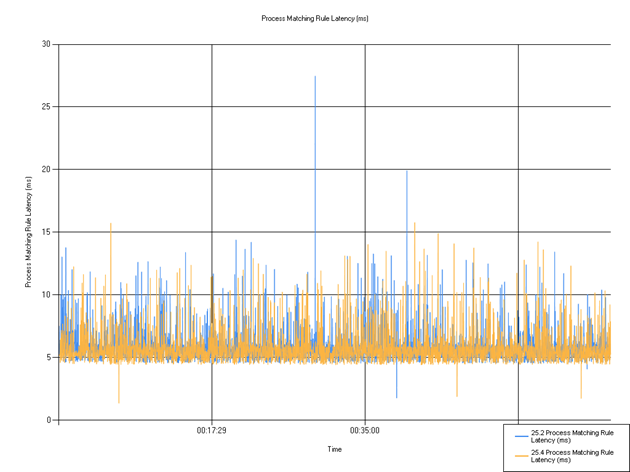

Rule matching latency

Shows the time taken for the rule to match. Some small fluctuations were observed relative to the previous release, but this change in performance should not affect customers in a significant way as it is caused by small variations in the tests that we perform.

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.2 MR Process Matching Rule Latency (ms) | 5.90 | 1.78 | 27.46 |
| 25.4 Process Matching Rule Latency (ms) | 5.78 | 1.37 | 15.78 |

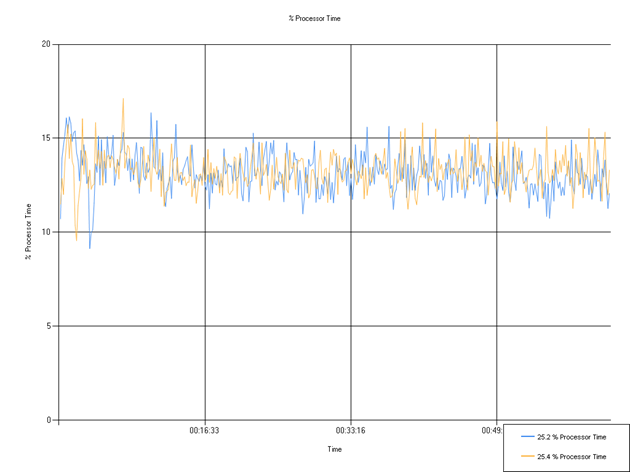

% Processor Time

Percentage of processor time used. The mean remains within 1 percentage point of the previous release, suggesting no significant change in processor usage that would impact customer experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.2 MR % Processor Time | 13.15 | 9.14 | 16.36 |
| 25.4 % Processor Time | 13.26 | 9.55 | 17.12 |

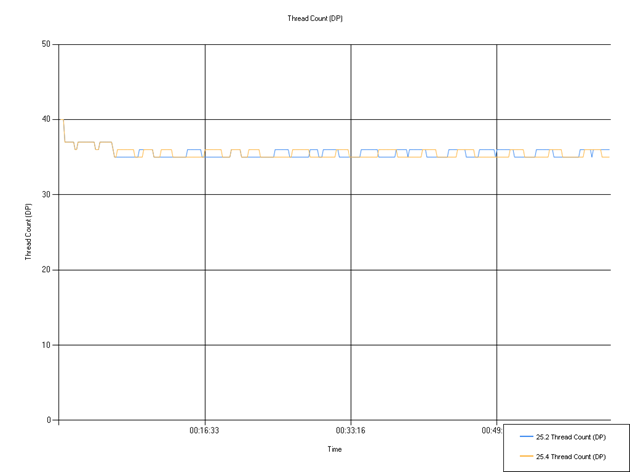

Thread Count (Defendpoint)

Number of threads used by Defendpoint. The mean has fluctuated, but not outside our normal error margins for the test, while the minimum and maximum values remained unchanged. This indicates consistent threading behavior, with a negligible change in average usage that is unlikely to impact customer experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 25.2 MR Thread Count (DP) | 35.61 | 35.00 | 40.00 |
| 25.4 Thread Count (DP) | 35.58 | 35.00 | 40.00 |

Memory testing

For each release, we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only the test suites for impacted features are identified and run. If nothing concerning is found, the build proceeds to production.

In the 25.4 release, our testing uncovered no concerns.

EPM-W 25.2 performance report

The aim of this document is to provide data on agreed performance metrics of the Privilege Management for Windows desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results, such as hardware configuration, Windows configuration and background activities, third-party products, and the nature of the EPM policy being used.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our datacenter. Each VM is configured with:

- Windows 10 22H2

- 4 Core 3.3GHz CPU

- 4GB RAM

Testing was performed using the GA release of 25.2.

Test Name

Low Flexibility Quick Start policy with a single matching rule.

Test Method

This test involves a modified Low Flexibility Quick Start policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 30 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The Quick Start policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.

Results

Listed below are the results from the runs of the tests on 25.2 and our previous release, 24.8. Due to the nature of our product, we are very sensitive to the OS and general computer activity, so some fluctuation is to be expected.

Rule matching latency

Shows the time taken for the rule to match. Some small fluctuations were observed relative to the previous release, but this change in performance should not affect customers in a significant way as it is caused by small variations in the tests that we perform.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.8 Process Matching Rule Latency (ms) | 5.71 | 1.29 | 17.86 |
| 25.2 Process Matching Rule Latency (ms) | 5.68 | 1.28 | 20.38 |

% Processor Time

Percentage of processor time used. The mean remains within 1 percentage point of the previous release, suggesting no significant change in processor usage that would impact customer experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.8 % Processor Time | 11.55 | 7.24 | 18.64 |
| 25.2 % Processor Time | 11.49 | 7.49 | 14.54 |

Thread Count (Defendpoint)

Number of threads used by Defendpoint. The mean has fluctuated, but not outside our normal error margins for the test, while the minimum and maximum values remained unchanged. This indicates consistent threading behavior, with a negligible change in average usage that is unlikely to impact customer experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.8 Thread Count (DP) | 35.67 | 35.00 | 40.00 |
| 25.2 Thread Count (DP) | 35.51 | 35.00 | 40.00 |

Memory testing

For each release, we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only the test suites for impacted features are identified and run. If nothing concerning is found, the build proceeds to production.

In the 25.2 release, our testing uncovered no concerns.

EPM-W 24.8 performance report

The aim of this document is to provide data on agreed performance metrics of the Endpoint Privilege Management for Windows (EPM-W) desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results, such as hardware configuration, Windows configuration and background activities, third-party products, and the nature of the EPM policy being used.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our datacenter. Each VM is configured with:

- Windows 10 22H2

- 4 Core 3.3GHz CPU

- 4GB RAM

Testing was performed using the GA release of 24.8.

Test Name

Low Flexibility Quick Start policy with a single matching rule.

Test Method

This test involves a modified Low Flexibility Quick Start policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 30 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The Quick Start policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.

Results

Listed below are the results from the runs of the tests on 24.8 and our previous release, 24.7. Due to the nature of our product, we are very sensitive to the OS and general computer activity, so some fluctuation is to be expected.

Rule matching latency

Shows the time taken for the rule to match. A small increase in mean latency was observed, while max latency decreased. These changes should not affect customers in a significant way, as they are caused by small variations in the tests that we perform.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.8 Process Matching Rule Latency (ms) | 5.71 | 1.29 | 17.86 |
| 24.7 Process Matching Rule Latency (ms) | 5.64 | 1.25 | 20.64 |

% Processor Time

Percentage of processor time used. In 24.8, the maximum value increased compared to 24.7, and the minimum also rose slightly, giving a wider range of variation. The mean remains within 1 percentage point of the previous release, suggesting no significant change in processor usage that would impact customer experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.8 % Processor Time | 11.55 | 7.24 | 18.64 |
| 24.7 % Processor Time | 11.74 | 6.91 | 14.47 |

Thread Count (Defendpoint)

Number of threads used by Defendpoint. In 24.8, the mean thread count increased slightly compared to 24.7, while the minimum and maximum values remained unchanged. This indicates consistent threading behavior with a negligible increase in average usage that is unlikely to impact customer experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.8 Thread Count (DP) | 35.67 | 35.00 | 40.00 |
| 24.7 Thread Count (DP) | 35.58 | 35.00 | 40.00 |

Memory testing

For each release, we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only the test suites for impacted features are identified and run. If nothing concerning is found, the build proceeds to production.

In the 24.8 release, our testing uncovered no concerns.

EPM-W 24.7 performance report

The aim of this document is to provide data on agreed performance metrics of the EPM-W desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results such as hardware configuration, Windows configuration and background activities, 3rd party products, and the nature of the EPM policy being used.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our data center. Each VM is configured with:

- Windows 10 22H2

- 4 Core 3.3GHz CPU

- 4GB RAM

Testing was performed using the GA release of 24.7.

Test Name

Low Flexibility Quick Start policy with a single matching rule.

Test Method

This test involves a modified Low Flexibility QuickStart policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 30 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The QuickStart policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application .exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.

Results

Listed below are the results from the runs of the tests on 24.7 and our previous release, 24.5. Due to the nature of our product, we are very sensitive to the OS and general computer activity, so some fluctuation is to be expected.

This report uses a different test methodology to previous reports, so the results aren’t comparable. However, the previous release was re-run using the new methodology to allow us to compare results in this report.

Rule matching latency

Shows the time taken for the rule to match. A small increase in mean and max latency was observed, but this change in performance should not affect customers in a significant way as it is caused by small variations in the tests that we perform.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.7 Process Matching Rule Latency (ms) | 5.64 | 1.25 | 20.64 |
| 24.5 Process Matching Rule Latency (ms) | 5.38 | 4.23 | 17.74 |

% Processor Time

Percentage of processor time used. In 24.5, we observed a single large spike which contributed to a higher max percentage value; this spike is not present in 24.7, resulting in a lower max. The minimum is lower and the mean is within 1 percentage point, so it’s unlikely that there would be any meaningful differences for a customer.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.7 % Processor Time | 11.74 | 6.91 | 14.47 |
| 24.5 % Processor Time | 11.85 | 8.29 | 19.46 |

Thread Count (Defendpoint)

Number of threads being used by Defendpoint. 24.7 uses one more thread than 24.5 at both the minimum and the maximum. This new thread was short-lived, which is why the mean is very similar between 24.5 and 24.7.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.7 Thread Count (DP) | 35.58 | 35.00 | 40.00 |
| 24.5 Thread Count (DP) | 35.48 | 34.00 | 39.00 |

Memory testing

For each release, we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only the test suites for impacted features are identified and run. If nothing concerning is found, the build proceeds to production.

In the 24.7 release, our testing uncovered no concerns.

EPM-W 24.5 performance report

The aim of this document is to provide data on agreed performance metrics of the EPM-W desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results such as hardware configuration, Windows configuration and background activities, 3rd party products, and the nature of the EPM policy being used.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our data center. Each VM is configured with:

- Windows 10 22H2

- 4 Core 3.3GHz CPU

- 4GB RAM

Testing was performed using the GA release of 24.5.

Test Name

Low Flexibility Quick Start policy with a single matching rule.

Test Method

This test involves a modified Low Flexibility Quick Start policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 30 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The Quick Start policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.

Results

Listed below are the results from the runs of the tests on 24.5 and our previous release, 24.3. Due to the nature of our product, we are very sensitive to the OS and general computer activity, so some fluctuation is to be expected.

This report uses a different test methodology to previous reports, so the results aren’t comparable. However, the previous release was re-run using the new methodology to allow us to compare results in this report.

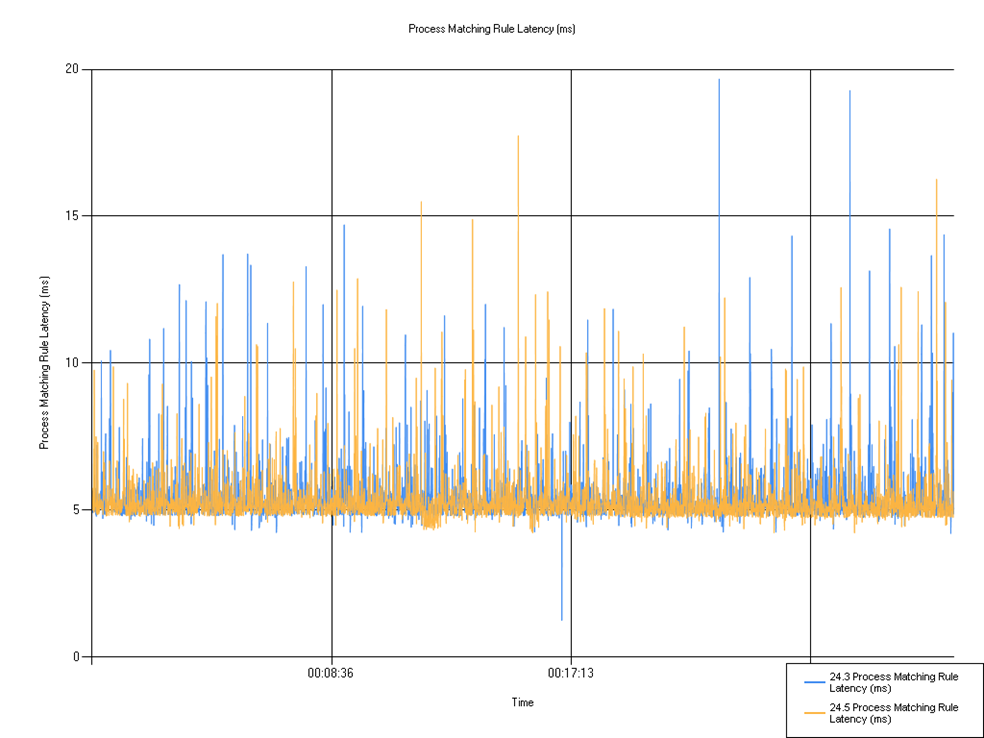

Rule matching latency

Shows the time taken for the rule to match. We can observe a small decrease in mean and max latency from 24.3 to 24.5, and a seemingly large increase in minimum latency. On further inspection, the 24.3 run contained only a single spike at that greatly reduced latency; otherwise the graphs look very similar. These minor changes are unlikely to make any difference to user experience.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.3 Process Matching Rule Latency (ms) | 5.46 | 1.25 | 19.67 |
| 24.5 Process Matching Rule Latency (ms) | 5.38 | 4.23 | 17.74 |
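The effect described above, where one unusually fast sample drags the minimum down while the rest of the run is unchanged, can be illustrated with a small sketch. The data below is invented purely for illustration, and `low_percentile` is a hypothetical helper using a simple index-based percentile, not something taken from the test tooling.

```python
from statistics import mean


def low_percentile(samples, fraction=0.01):
    """Return the sample found at the given low fraction of the sorted data."""
    ordered = sorted(samples)
    index = min(len(ordered) - 1, int(fraction * len(ordered)))
    return ordered[index]


# Invented data: 1,800 launches (30 minutes at one per second) with one fast outlier.
samples = [5.4] * 1799 + [1.25]

print(f"min  = {min(samples):.2f} ms")
print(f"p1   = {low_percentile(samples):.2f} ms")
print(f"mean = {mean(samples):.2f} ms")
```

In this invented run the minimum is set entirely by the single outlier, while the first percentile and the mean stay close to the typical value. This is why a large difference in Min between two releases does not by itself indicate a behavioral change.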

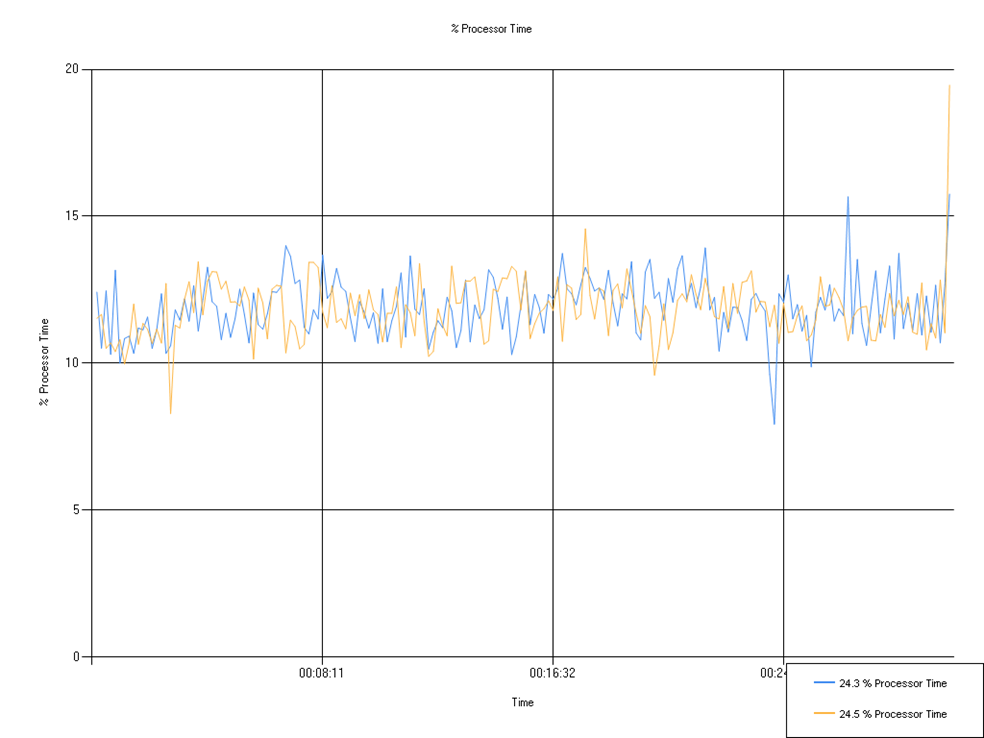

% Processor Time

Percentage of processor time used. We observed a single large spike which contributed to a higher max percentage value, but the minimum and mean were within 0.4%. It’s unlikely that there would be any meaningful differences for a customer.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.3 % Processor Time | 11.92 | 7.93 | 15.76 |
| 24.5 % Processor Time | 11.85 | 8.29 | 19.46 |

Thread Count (Defendpoint)

Number of threads being used by Defendpoint. 24.5 is using one more thread on average than 24.3.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.3 Thread Count (DP) | 34.59 | 33.00 | 38.00 |
| 24.5 Thread Count (DP) | 35.48 | 34.00 | 39.00 |

Memory testing

For each release, we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only the test suites for impacted features are identified and run. If nothing concerning is found, the build proceeds to production.

In the 24.5 release, our testing uncovered no concerns.

EPM-W 24.3 performance report

The aim of this document is to provide data on agreed performance metrics of the EPM-W desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results such as hardware configuration, Windows configuration and background activities, 3rd party products, and the nature of the EPM policy being used.

Performance benchmarking

Test Scenario

Tests are performed on dedicated VMs hosted in our data center. Each VM is configured with:

- Windows 10 21H2

- 4 Core 3.3GHz CPU

- 8GB RAM

Testing was performed using the GA release of 24.3.

Test Name

Quick Start policy with a single matching rule.

Test Method

This test involves a modified Quick Start policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 60 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test.

The Quick Start policy is commonly used as a base for all of our customers and it can be applied using our Policy Editor Import Template function. It was chosen as it's our most common use case. The application being elevated is a dummy application exe, which we’ve created specifically for this testing and it terminates quickly and doesn't have a UI, making it ideal for the testing scenario.

Results

Listed below are the results from the runs of the tests on 24.3 and our previous release, 24.1. Due to the nature of our product, we are very sensitive to the OS and general computer activity, so some fluctuation is to be expected.

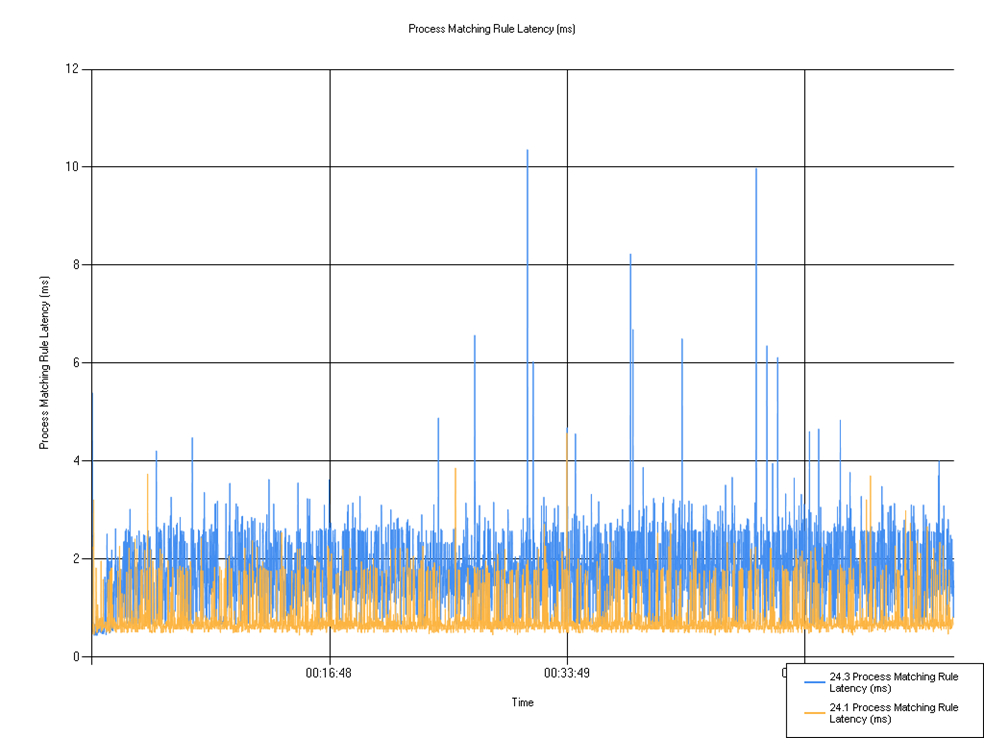

Rule matching latency

Shows the time taken for the rule to match. A small increase in mean and max latency was observed, but this change in performance should not affect customers in a significant way as it is caused by small variations in the tests that we perform.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.3 Process Matching Rule Latency (ms) | 1.74 | 0.45 | 10.36 |
| 24.1 Process Matching Rule Latency (ms) | 0.80 | 0.45 | 4.57 |

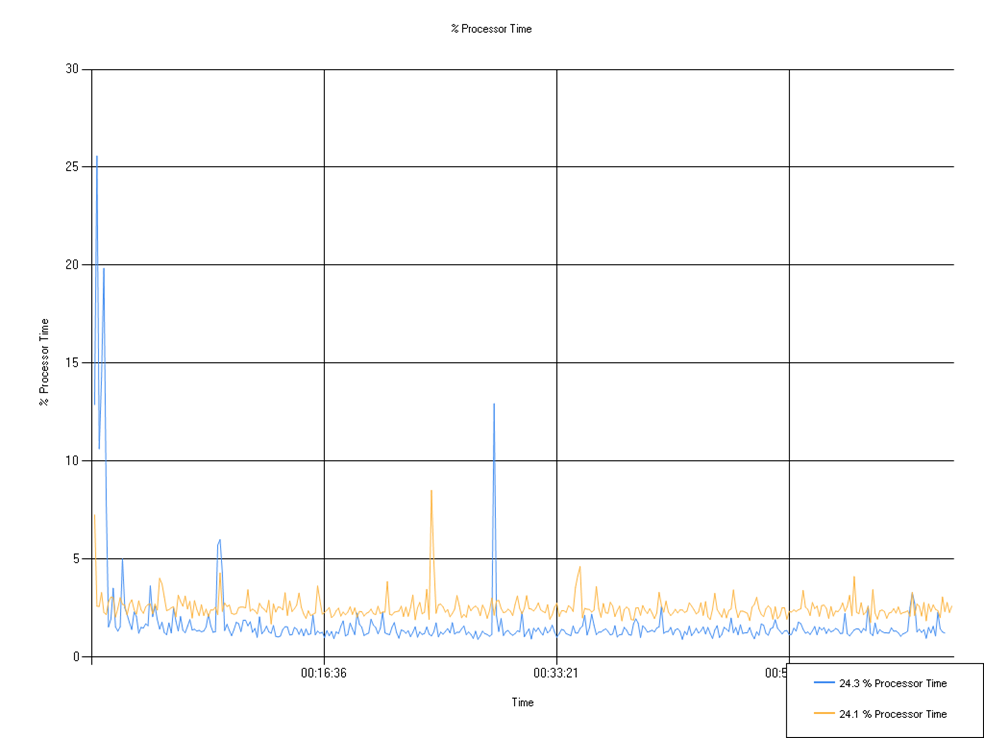

% Processor Time

Percentage of processor time used. A small decrease in mean processor time along with a small spike of maximum CPU Utilization was observed due to the nature of background tasks being performed by Windows.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.3 % Processor Time | 1.73 | 0.92 | 25.58 |
| 24.1 % Processor Time | 2.52 | 1.70 | 8.52 |

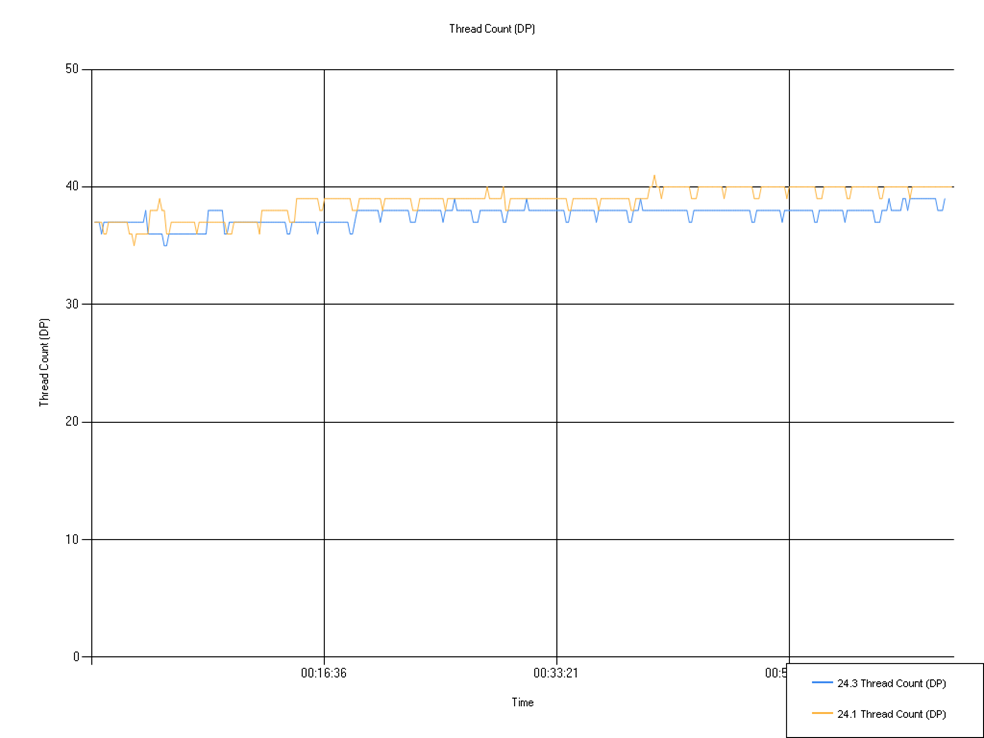

Thread Count (Defendpoint)

Number of threads being used by Defendpoint. There was a small decrease in thread count between 24.1 and 24.3.

| Series | Mean | Min | Max |
|---|---|---|---|
| 24.3 Thread Count (DP) | 37.60 | 35.00 | 39.00 |
| 24.1 Thread Count (DP) | 38.80 | 35.00 | 41.00 |

Memory testing

For each release, we run through a series of automation tests (covering Application control, token modification, DLL control and Event auditing) using a build with memory leak analysis enabled to ensure there are no memory leaks. We use Visual Leak Detector (VLD) version 2.51 which when enabled at compile time replaces various memory allocation and deallocation functions to record memory usage. On stopping a service with this functionality an output file is saved to disk, listing all leaks VLD has detected. We use these builds with automation so that an output file is generated for each test scenario within a suite.

The output files are then examined by a developer, who reviews the results looking for anything notable. Due to the number of automation tests, only the test suites for impacted features are identified and run. If nothing concerning is found, the build proceeds to production.

In the 24.3 release, our testing uncovered no concerns.

EPM-W 24.1 performance report

The aim of this document is to provide data on agreed performance metrics of the EPM-W desktop client compared to the previous release.

The content of this document should be used to provide general guidance only. There are many different factors in a live environment which could show different results such as hardware configuration, Windows configuration and background activities, 3rd party products, and the nature of the EPM policy being used.

Performance benchmarking

Test scenario

Tests are performed on dedicated VMs hosted in our data center. Each VM is configured with:

- Windows 10 21H2

- 4 Core 3.3GHz CPU

- 8GB RAM

Testing was performed using the GA release of 24.1.

Test name

Quick Start policy with a single matching rule.

Test method

This test involves a modified QuickStart policy where a single matching rule is added which auto-elevates an application based on its name. Auditing is also turned on for the application. The application is a trivial command-line app and is executed once per second for 60 minutes. Performance counters and EPM-W activity logging are collected and recorded by the test, and this data is available as an archive which should be attached to this report.

Results

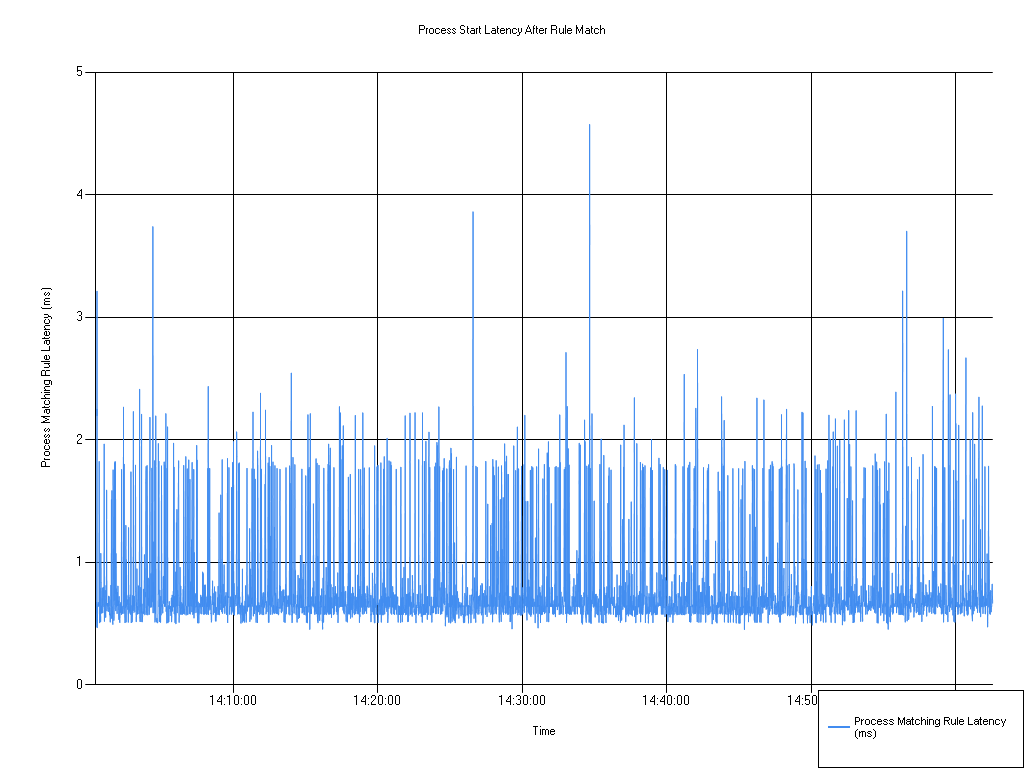

Process start latency after rule match

| Series | Mean | Min | Max |
|---|---|---|---|
| Process Matching Rule Latency (ms) | 0.80 | 0.45 | 4.57 |

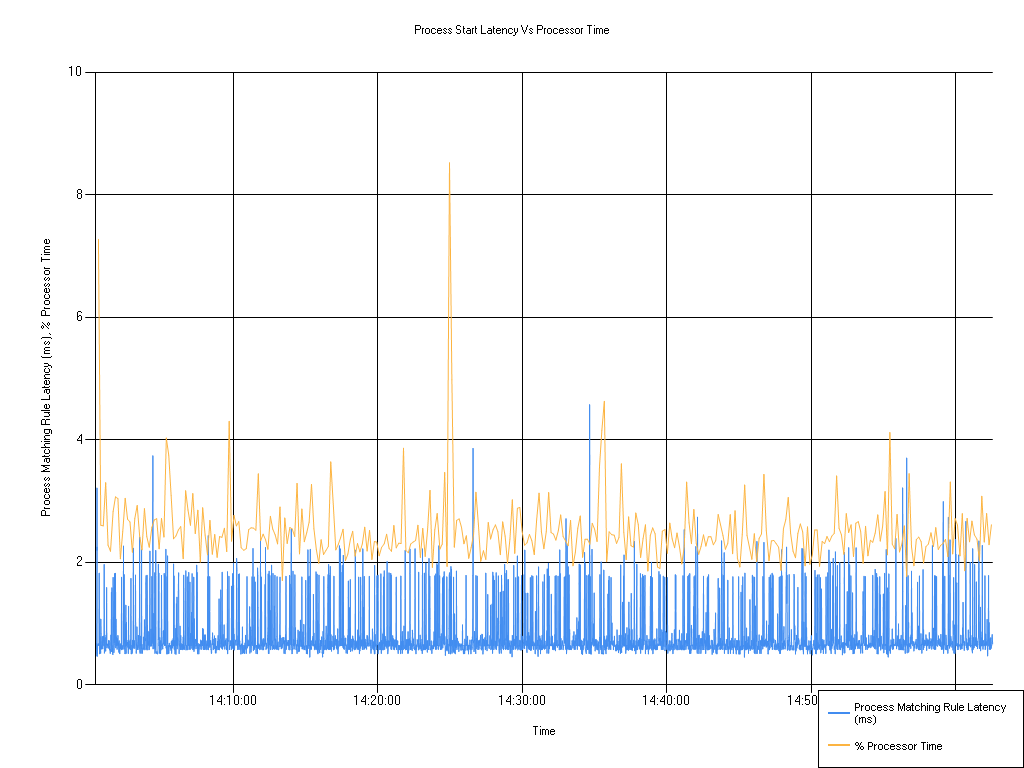

Process start latency vs. processor time

| Series | Mean | Min | Max |
|---|---|---|---|
| Process Matching Rule Latency (ms) | 0.80 | 0.45 | 4.57 |
| % Processor Time | 2.52 | 1.70 | 8.52 |

CPU user/system time

| Series | Mean | Min | Max |
|---|---|---|---|
| % User Time | 1.01 | 0.31 | 4.58 |
| % Privileged Time | 1.50 | 0.89 | 4.08 |

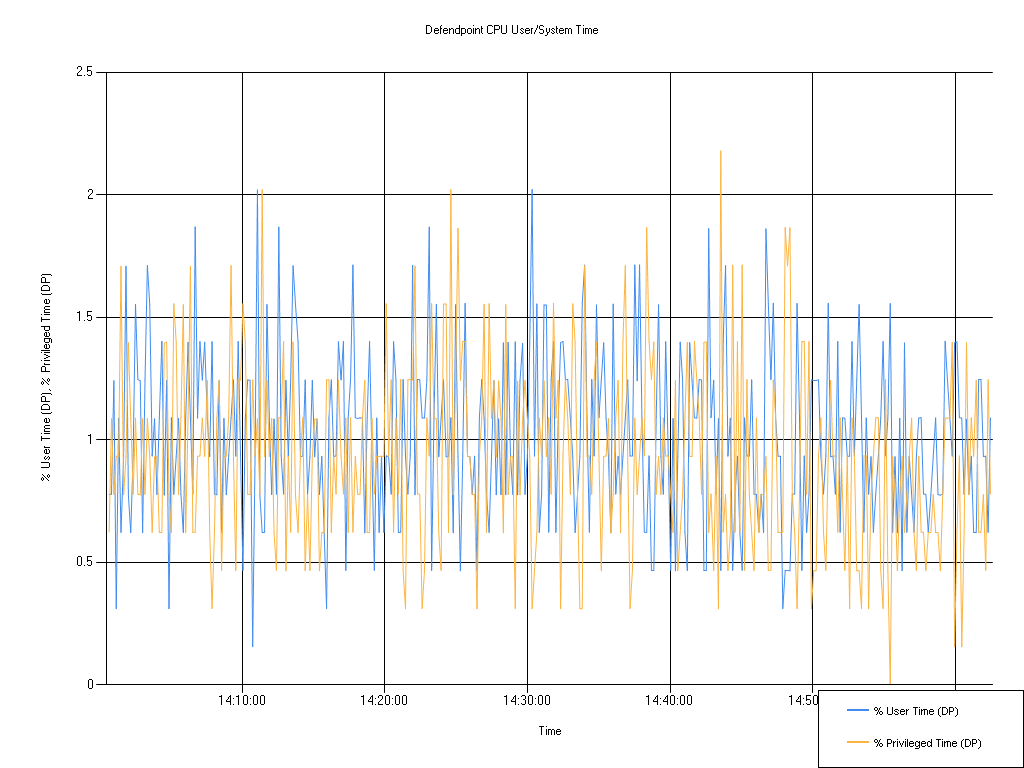

Defendpoint CPU user/system time

| Series | Mean | Min | Max |
|---|---|---|---|
| % User Time (DP) | 1.02 | 0.16 | 2.02 |
| % Privileged Time (DP) | 0.93 | 0.00 | 2.18 |

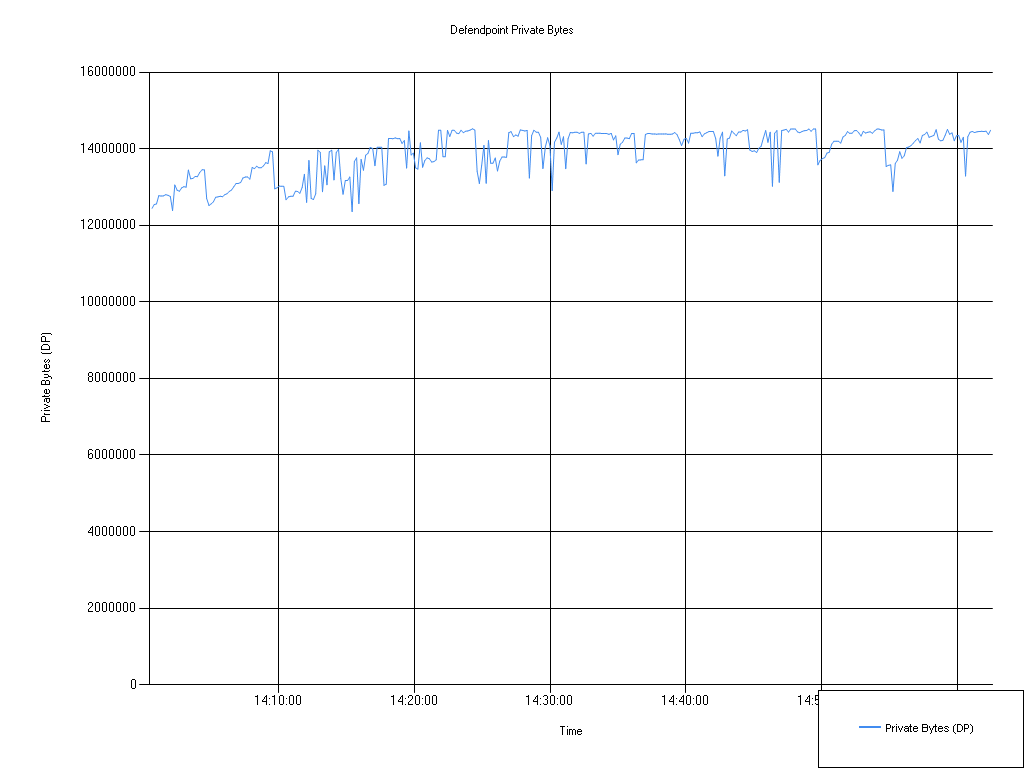

Defendpoint private bytes

| Series | Mean | Min | Max |
|---|---|---|---|
| Private Bytes (DP) | 13,905,599.00 | 12,357,630.00 | 14,520,320.00 |

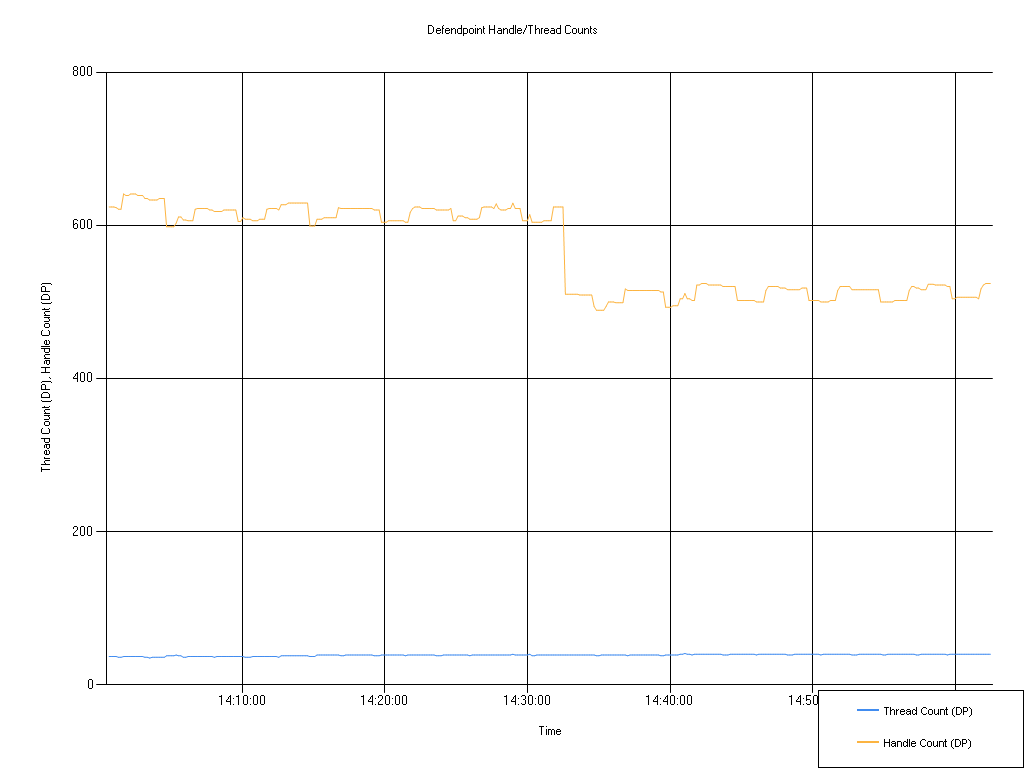

Defendpoint handle/thread counts

| Series | Mean | Min | Max |
|---|---|---|---|
| Thread Count (DP) | 38.80 | 35.00 | 41.00 |
| Handle Count (DP) | 565.84 | 489.00 | 641.00 |
