Configure Elasticsearch or Logstash reporting | AD Bridge

Integrate the AD Bridge reporting component with Elasticsearch or Logstash. The BTEventReaper service can send events to Elasticsearch or Logstash in addition to SQL Server and BeyondInsight.

Important: You can configure either Elasticsearch or Logstash, but not both.

BeyondTrust provides a common mapping for the Elastic Common Schema (ECS) that lets you search across events from AD Bridge (ADB), BeyondInsight for Unix & Linux (BIUL), and Privilege Management for Unix & Linux (PMUL).

Requirements

- Configure a Group Policy with the user monitor and the event forwarder enabled.

- AD Bridge collectors must be installed.

- An existing Logstash or Elasticsearch environment must already be configured. Credentials are required to connect to the Elasticsearch or Logstash server.

Run the Reporting Database Connection Manager:

- From the Start menu, select Reporting Database Connection Manager.

  Alternatively, you can run the tool from the command line:

  ```cmd
  "C:\Program Files\BeyondTrust\PBIS\Enterprise\DBUtilities\bteventdbreaper" /gui
  ```

-

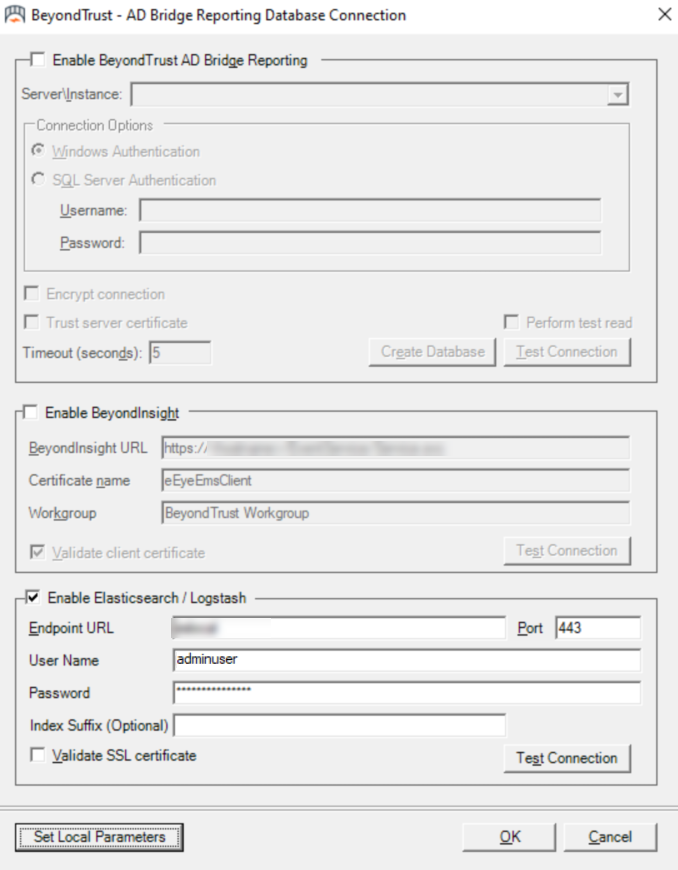

Check the Enable Elasticsearch/Logstash box.

- Enter the endpoint URL, user name, and password.

- Enter the port number.

-

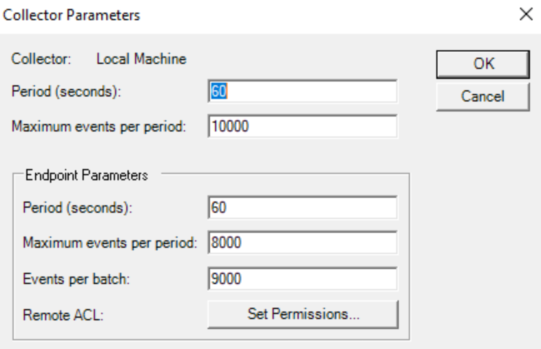

To set the parameters for ElasticSearch, click Set Local Parameters. This option is to set local parameters for ElasticSearch. SQL Server and BeyondInsight get the parameters from the BeyondTrust Management Console.

- Click Test Connection to verify that the connection to the Elasticsearch or Logstash server is established.

- Click OK.

Index Suffix is an optional field that sets the index suffix used in Elasticsearch/Logstash.
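If Test Connection fails, reproducing the request outside the tool can help isolate the problem. The following is a minimal Python sketch, not the tool's own check, assuming the endpoint is an Elasticsearch server that accepts HTTP basic authentication and that the endpoint URL is a scheme and host without a port (for example `https://elastic.example.com`). The helper names `build_check_request` and `check_connection` and all values shown are hypothetical placeholders. A `GET` on the Elasticsearch root path returns a JSON document that includes fields such as `cluster_name` and `version`.

```python
import base64
import json
import ssl
import urllib.request
from typing import Optional


def build_check_request(endpoint: str, port: int, user: str, password: str) -> urllib.request.Request:
    """Build a GET request for the root path of a (hypothetical) Elasticsearch endpoint."""
    # Basic auth: base64("user:password") sent in the Authorization header.
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    # Assumes the endpoint has no port or path of its own, e.g. "https://host".
    url = f"{endpoint.rstrip('/')}:{port}/"
    return urllib.request.Request(url, method="GET", headers={"Authorization": f"Basic {token}"})


def check_connection(request: urllib.request.Request, cafile: Optional[str] = None) -> dict:
    """Send the request and return the decoded JSON body; raises on HTTP or TLS errors."""
    # Verifies the server certificate; pass cafile if the server uses a private CA.
    context = ssl.create_default_context(cafile=cafile)
    with urllib.request.urlopen(request, context=context, timeout=10) as response:
        return json.load(response)


# Example usage (placeholder values; performs a real network request):
# req = build_check_request("https://elastic.example.com", 9200, "username", "changeme")
# info = check_connection(req, cafile="/path/to/ca.pem")
# print(info["cluster_name"], info["version"]["number"])
```

A `401` error points to the credentials, while a certificate error usually means the CA certificate is not trusted on the reporting server.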

For more information about AD Bridge collectors, see Set up the Collection server.

Configure Logstash for AD Bridge

Use the following template to set up Logstash to work with AD Bridge.

Sample configuration file

In your pipeline's .conf file, add the following:

```
input {
  http {
    port => [PORT]
    codec => json
    password => [PASSWORD]
    user => [USERNAME]
    ssl => true
    ssl_certificate => [SSL CERT LOCATION]
    ssl_key => [SSL KEY LOCATION]
    additional_codecs => { "application/json" => "es_bulk" }
  }
}

filter {
}

output {
  # Use either the on-premises output or the cloud output, depending on your deployment.

  # On-premises connection output
  elasticsearch {
    hosts => [ [URL/IP] ]
    ssl => true
    ssl_certificate_verification => true
    cacert => [CA CERT LOCATION]
    user => [USERNAME]
    password => [PASSWORD]
    index => "%{[@metadata][_index]}"
  }

  # Cloud connection output
  elasticsearch {
    cloud_id => [CLOUD ID - Get this from ES]
    cloud_auth => [User:Password]
    index => "%{[@metadata][_index]}"
  }
}
```

Example:

```
input {
  http {
    port => 8080
    codec => json
    password => "******"
    user => "username"
    ssl => true
    ssl_certificate => "/etc/pki/tls/certs/logstash_combined.crt"
    ssl_key => "/etc/pki/tls/private/logstash.key"
    additional_codecs => { "application/json" => "es_bulk" }
  }
}

filter { }

output {
  # On-premises connection output
  elasticsearch {
    hosts => ["https://elasticsever.com:9200"]
    ssl => true
    ssl_certificate_verification => true
    cacert => "/etc/logstash/elasticsearch-ca.pem"
    user => "logstash_user"
    password => "password"
    index => "%{[@metadata][_index]}"
  }

  # Cloud connection output
  elasticsearch {
    cloud_id => "elasticCloud:fGtdSdffFFerdfg35sDFgsdvsdfhhFRDDFZSQ1YjZiOWYzNDQwNTU0NGRkYmZiYjFjYzMxY2EzNzc5OQ=="
    cloud_auth => "esUser:yyNrZ4BMcpCkDbW"
    index => "%{[@metadata][_index]}"
  }
}
```
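In this configuration, `additional_codecs` maps the `application/json` content type to the `es_bulk` codec, which decodes an Elasticsearch bulk (NDJSON) request body into individual events. The output's `index => "%{[@metadata][_index]}"` setting relies on the `_index` value from each bulk action line being made available under `@metadata`. To exercise the pipeline without AD Bridge, you could send a hand-built bulk body. The following minimal Python sketch assumes basic authentication as configured in the `http` input; the index name `adbridge-test`, the event fields, and the helper names `build_bulk_body` and `build_logstash_request` are hypothetical, and the payload is not the exact format BTEventReaper sends.

```python
import base64
import json
import urllib.request
from typing import Iterable, Mapping


def build_bulk_body(index: str, events: Iterable[Mapping]) -> bytes:
    """Encode events as an Elasticsearch bulk (NDJSON) body: an action line, then a source line, per event."""
    lines = []
    for event in events:
        # Action line: carries the target index for this event.
        lines.append(json.dumps({"index": {"_index": index}}))
        # Source line: the event document itself.
        lines.append(json.dumps(event))
    # The bulk format expects a newline after the last line.
    return ("\n".join(lines) + "\n").encode("utf-8")


def build_logstash_request(url: str, user: str, password: str, body: bytes) -> urllib.request.Request:
    """Build a POST to the Logstash http input with basic auth and a JSON content type."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return urllib.request.Request(
        url,
        data=body,
        method="POST",
        headers={
            # application/json is the content type mapped to es_bulk by additional_codecs.
            "Content-Type": "application/json",
            "Authorization": f"Basic {token}",
        },
    )


# Example usage (placeholder values; sends a real request). If Logstash uses a
# certificate from a private CA, pass context=ssl.create_default_context(cafile=...)
# to urlopen.
# body = build_bulk_body("adbridge-test", [{"message": "AD Bridge pipeline test"}])
# req = build_logstash_request("https://logstash.example.com:8080", "username", "changeme", body)
# with urllib.request.urlopen(req, timeout=10) as resp:
#     print(resp.status)
```

If the pipeline is working, the test document should appear in the `adbridge-test` index in Elasticsearch.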
